Cardiovascular disease is the leading cause of mortality worldwide, and early recognition and management of symptoms are crucial for improving outcomes. Approximately 70% of patients seek health information from search engines before consulting medical professionals.1 Chat Generative Pretrained Transformer (ChatGPT), a dialogue-based artificial intelligence (AI) language model, was launched in November 2022 and attracted widespread attention in the scientific community.2 Microsoft's Bing Chat, an AI-based chatbot built on GPT-4 that provides conversational assistance with access to real-time web searches (WSa-GPT), was released on February 8, 2023.3 WSa-GPT uses natural language processing and deep learning algorithms to respond in the form of natural conversation. Although chatbots such as ChatGPT have been shown to provide mostly accurate answers to basic questions on cardiovascular disease prevention4 and to patient queries, and to write discharge reports,5 their safety in assisting patients who consult them still needs to be assessed. The aim of this simulation study was to qualitatively evaluate the feasibility and accuracy of a WSa-GPT chatbot in providing cardiology-related assistance for common and significant cardiovascular conditions.

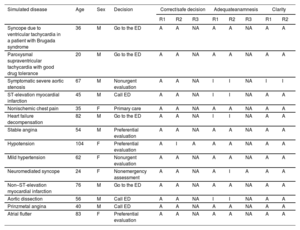

This study was conducted from February 13 to 17, 2023, shortly after the launch of the WSa-GPT chatbot. We tested several prompts until we found one with which the chatbot functioned effectively as a health assistant. One cardiologist, drawing on clinical experience, simulated 14 patients in freestyle-like conversations covering common and significant cardiovascular symptoms, ranging from emergent to banal conditions (table 1). The conversations were recorded, and 2 independent cardiologists rated as “appropriate” or “inappropriate” whether the anamnesis was thorough and relevant (ie, whether it matched the symptoms and responses and collected the relevant health history, symptoms, and risk factors in line with clinical guidelines), whether the final decision was safe for the patient, and whether the responses were clear and easy to understand. Discrepancies were resolved by a third independent cardiologist. As no real patients were involved, ethics approval was not required.

Table 1. Patient characteristics, decision, and assessment.

| Simulated disease | Age | Sex | Decision | Correct/safe decision (R1) | Correct/safe decision (R2) | Correct/safe decision (R3) | Adequate anamnesis (R1) | Adequate anamnesis (R2) | Adequate anamnesis (R3) | Clarity (R1) | Clarity (R2) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Syncope due to ventricular tachycardia in a patient with Brugada syndrome | 36 | M | Go to the ED | A | A | NA | A | A | NA | A | A |
| Paroxysmal supraventricular tachycardia with good drug tolerance | 20 | M | Go to the ED | A | A | NA | A | A | NA | A | A |
| Symptomatic severe aortic stenosis | 67 | M | Nonurgent evaluation | A | A | NA | I | I | NA | I | I |
| ST-elevation myocardial infarction | 45 | M | Call ED | A | A | NA | I | I | NA | A | A |
| Nonischemic chest pain | 35 | F | Primary care | A | A | NA | A | A | NA | A | A |
| Heart failure decompensation | 82 | M | Go to the ED | A | A | NA | I | I | NA | A | A |
| Stable angina | 54 | M | Preferential evaluation | A | A | NA | A | A | NA | A | A |
| Hypotension | 104 | F | Preferential evaluation | A | I | A | A | A | NA | A | A |
| Mild hypertension | 62 | F | Nonurgent evaluation | A | A | NA | A | A | NA | A | A |
| Neuromediated syncope | 24 | F | Nonemergency assessment | A | A | NA | A | I | A | A | A |
| Non–ST-elevation myocardial infarction | 76 | M | Go to the ED | A | A | NA | A | A | NA | A | A |
| Aortic dissection | 56 | M | Call ED | A | A | NA | I | I | NA | A | A |
| Prinzmetal angina | 40 | M | Call ED | A | A | NA | A | A | NA | A | A |
| Atrial flutter | 83 | F | Preferential evaluation | A | A | NA | A | A | NA | A | A |

A, appropriate; ED, emergency department; F, female; I, inappropriate; M, male; NA, not available; R1, reviewer 1; R2, reviewer 2; R3, reviewer 3.

The simulated patients were predominantly male (64.3%), with a median age of 54 [interquartile range, 36-73] years. A decision was reached after a median of 23 [18-29] messages. In all 14 simulated cases (100%), the final decision of WSa-GPT was rated “appropriate” (correct and safe). In addition, 13 cases (93%) were rated “appropriate” for clarity and ease of understanding, and 10 cases (71%) for anamnesis (table 1). The 2 discrepancies between reviewers were resolved as “appropriate” by the third cardiologist. All simulated conversations are available in videos 1 to 14 of the supplementary data.
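As an illustrative cross-check of these proportions, the short Python sketch below recomputes them from the ratings in table 1. It is not part of the original analysis: the names `CASES`, `adjudicate`, and `summarize` are hypothetical, and it assumes the adjudication rule described in the methods (the third reviewer's rating is used only when reviewers 1 and 2 disagree). It deliberately leaves out the median age and interquartile range, because the quantile method used in the original analysis is not stated.

```python
"""Recompute the summary proportions reported for the 14 simulated cases.

Illustrative sketch only: ratings are transcribed from table 1, and the
adjudication rule (R3 decides only when R1 and R2 disagree) is assumed from
the methods description.
"""

# Each case: (sex, decision ratings, anamnesis ratings, clarity ratings).
# Ratings are "A" (appropriate) or "I" (inappropriate); None marks R3 = NA.
CASES = [
    ("M", ("A", "A", None), ("A", "A", None), ("A", "A")),  # 1 VT, Brugada syndrome
    ("M", ("A", "A", None), ("A", "A", None), ("A", "A")),  # 2 PSVT
    ("M", ("A", "A", None), ("I", "I", None), ("I", "I")),  # 3 Aortic stenosis
    ("M", ("A", "A", None), ("I", "I", None), ("A", "A")),  # 4 STEMI
    ("F", ("A", "A", None), ("A", "A", None), ("A", "A")),  # 5 Nonischemic chest pain
    ("M", ("A", "A", None), ("I", "I", None), ("A", "A")),  # 6 Heart failure
    ("M", ("A", "A", None), ("A", "A", None), ("A", "A")),  # 7 Stable angina
    ("F", ("A", "I", "A"), ("A", "A", None), ("A", "A")),   # 8 Hypotension
    ("F", ("A", "A", None), ("A", "A", None), ("A", "A")),  # 9 Mild hypertension
    ("F", ("A", "A", None), ("A", "I", "A"), ("A", "A")),   # 10 Neuromediated syncope
    ("M", ("A", "A", None), ("A", "A", None), ("A", "A")),  # 11 NSTEMI
    ("M", ("A", "A", None), ("I", "I", None), ("A", "A")),  # 12 Aortic dissection
    ("M", ("A", "A", None), ("A", "A", None), ("A", "A")),  # 13 Prinzmetal angina
    ("F", ("A", "A", None), ("A", "A", None), ("A", "A")),  # 14 Atrial flutter
]


def adjudicate(r1, r2, r3=None):
    """Return the final rating: the shared R1/R2 rating, else R3's rating."""
    if r1 == r2:
        return r1
    if r3 is None:
        raise ValueError("R1 and R2 disagree but no R3 rating was given")
    return r3


def summarize(cases):
    """Count male cases, 'appropriate' final ratings, and R1/R2 discrepancies."""
    counts = {"male": 0, "decision": 0, "anamnesis": 0, "clarity": 0,
              "discrepancies": 0}
    for sex, decision, anamnesis, clarity in cases:
        counts["male"] += sex == "M"
        for domain, ratings in (("decision", decision),
                                ("anamnesis", anamnesis),
                                ("clarity", clarity)):
            if ratings[0] != ratings[1]:
                counts["discrepancies"] += 1
            counts[domain] += adjudicate(*ratings) == "A"
    return len(cases), counts


if __name__ == "__main__":
    n, counts = summarize(CASES)
    for key in ("male", "decision", "anamnesis", "clarity"):
        print(f"{key}: {counts[key]}/{n} ({100 * counts[key] / n:.1f}%)")
    print(f"discrepancies resolved by R3: {counts['discrepancies']}")
```

Run as a script, it prints 9/14 (64.3%) male, 14/14 (100.0%) appropriate decisions, 10/14 (71.4%) appropriate anamnesis, 13/14 (92.9%) appropriate clarity, and 2 discrepancies resolved by the third reviewer; the text above rounds the last two percentages to whole numbers.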

This exploratory study found that the WSa-GPT chatbot provided appropriate and, in most cases, clear advice across a range of simulated cardiovascular conditions. Although the anamnesis was deemed inappropriate in 4 cases (eg, in cases 3 and 6, no questions were asked about nocturia, weight gain, or paroxysmal nocturnal dyspnea), the final advice was appropriate in all of them. These findings support previous data suggesting the potential of AI-based interactive chatbots for cardiology-related assistance.4 Such chatbots could deliver prompt and accurate answers to health inquiries, thereby reducing the workload of health care providers.5,6 For example, the patient-chatbot conversation could be transmitted as an electronic message, allowing physicians to conduct an initial clinical assessment before the patient reaches the emergency department. Implementing such chatbots could also reduce health care costs and support patients in remote areas with limited access to primary care physicians.

Several limitations merit consideration. First, patient-chatbot interactions were simulated rather than conducted with real patients seeking medical advice. However, it would arguably be unethical to delay a patient's consultation with a health care provider simply to evaluate the safety of an AI-based chatbot. Moreover, the freestyle-like conversations used in the simulation could have biased the evaluation of the tool. Second, the sample size was small because, 1 week after its launch, Microsoft limited Bing Chat conversations to 11 messages. In addition, although we simulated the most common reasons for presenting to the emergency department with acute chest pain, other, less frequent conditions (eg, myocarditis, pneumothorax, Boerhaave syndrome) were not simulated or evaluated; thus, the appropriateness of WSa-GPT cannot be extrapolated to these scenarios. Third, when we used the same original prompt 3 months after conceiving the study, the conversations were no longer reproducible, because Bing Chat had undergone several changes and focused more on assisted web searches. Future studies should therefore address not only the qualitative feasibility and accuracy of AI-based chatbots but also the reproducibility of their results. Fourth, although the cardiologist simulating the patients was different from those evaluating the answers, some bias may have been introduced. Fifth, 64% of the simulated patients were male and all were Caucasian. Further research is required to assess the safety and effectiveness of the WSa-GPT chatbot in diverse patient populations and in chronic conditions, as well as its role in supporting providers with personalized care. Sixth, the duration of the conversations was not measured, although they proceeded naturally without any significant delay that could have influenced the dialogue experience. Seventh, these promising results depend on a customized chatbot prompt, which may not be replicable in other settings.

In conclusion, 2 independent cardiologists deemed that the WSa-GPT chatbot provided appropriate and mostly clear advice on the urgency of seeking in-person medical evaluation in 14 simulated patient cases. However, the results could not be reproduced at a later date because of several changes to the WSa-GPT chatbot engine, which limits the applicability of this tool. Reproducibility will be an essential criterion when assessing the feasibility of future GPT-4-based chatbots and their implementation in hospital and prehospital settings.

FUNDING

None.

AUTHORS’ CONTRIBUTIONS

All authors have contributed significantly to the following: a) the conception and design, data acquisition, or its analysis and interpretation; b) drafting the article or critically revising its intellectual content; c) providing final approval to the version to be published; and d) agreeing to accept responsibility for all aspects of the article and to investigate and resolve any questions regarding the accuracy and veracity of any part of the work.

CONFLICTS OF INTEREST

J. Sanchis is editor-in-chief of Rev Esp Cardiol. The journal's editorial procedure to ensure impartial handling of the manuscript has been followed. P. López-Ayala has received research grants from the Swiss Heart Foundation (FF20079 and FF21103) and speakers’ honoraria from Quidel, paid to the institution, outside the submitted work. J. Boeddinghaus has received research grants from the University of Basel, the University Hospital of Basel, the Division of Internal Medicine, the Swiss Academy of Medical Sciences, the Gottfried and Julia Bangerter-Rhyner Foundation, and the Swiss National Science Foundation (P500PM_206636), and speaker honoraria from Siemens, Roche Diagnostics, Ortho Clinical Diagnostics, and Quidel Corporation. C. Mueller has received research grants from the Swiss National Science Foundation, the Swiss Heart Foundation, the KTI, the European Union, the University of Basel, the University Hospital Basel, Abbott, Astra Zeneca, Beckman Coulter, BRAHMS, Idorsia, Novartis, Ortho Clinical Diagnostics, Quidel, Roche, Siemens, Singulex, and Sphingotec, as well as speaker/consulting honoraria from Astra Zeneca, Bayer, Boehringer Ingelheim, BMS, Daiichi Sankyo, Idorsia, Osler, Novartis, Roche, Sanofi, Siemens, and Singulex, all paid to the institution. The remaining authors have nothing to disclose.