Systematic reviews represent a specific type of medical research in which the units of analysis are the original primary studies. They are essential tools for synthesizing available scientific information, increasing the validity of the conclusions of primary studies, and identifying areas for future research. They are also indispensable for the practice of evidence-based medicine and the medical decision-making process. However, conducting high-quality systematic reviews is not easy, and they can sometimes be difficult to interpret. This special article presents the rationale for carrying out and interpreting systematic reviews and uses a hypothetical example to draw attention to key points.


INTRODUCTION

It has been a tiring week. You sit down to look back calmly on the decisions you’ve made. These included requesting an operation for a patient with three-vessel disease, deciding whether to treat an 82-year-old patient with an inferior infarction of 70 min duration with fibrinolytic therapy or transfer her to your center for primary angioplasty, and deciding on anticoagulation treatment for an outpatient with atrial fibrillation.

Although you are reasonably sure your decisions were based on the best available evidence, you have some lingering doubts. Perhaps studies have been published which could lead your decisions to be questioned? Or perhaps different studies of the same intervention have produced different results? It's true that you have not had much time for reading over the past few months. To quickly clear up your doubts, you realize you need a concise, current, and rigorous summary of the best available evidence regarding the decisions you had to take. In other words, you need a systematic review (SR).1

SRs are considered the most reliable source of evidence for medical decision-making,2 which may explain their growing popularity and the sharp rise in the number of SRs published in recent years.2 However, performing a high-quality SR is not easy. There are rules governing how SRs should be carried out and, as with other study designs, recommendations on how their results should be presented. These quality control guidelines have been developed by international, multidisciplinary groups of experts, including authors of SRs, methodologists, clinicians, and editors.2, 3, 4 This article presents the underlying rationale for performing and interpreting SRs and uses a hypothetical example to highlight key points in their execution.

CONCEPT AND NOMENCLATURE

SRs are scientific investigations in which the units of analysis are the original primary studies. These studies are used to answer a clearly formulated question of interest through a systematic and explicit process. For that reason, SRs are considered secondary research (“research-based research”). Reviews that do not follow a systematic process (narrative reviews), on the other hand, cannot be considered a formal research process; they are simply a type of scientific literature based primarily on opinion.

From a formal point of view, SRs summarize the results of primary research using strategies to limit bias and random error.5 These strategies include:

• Systematic and exhaustive searching for all potentially relevant articles.

• The use of explicit and reproducible criteria to select articles which are eventually included in the review.1

• Describing the design and implementation of the original studies, synthesizing the data, and interpreting the results.

Although SRs are a tool for synthesizing information, it is not always possible to summarize the results of the primary studies concisely. When the results are not combined statistically, the SR is called a qualitative review. In contrast, a quantitative SR, or meta-analysis (MA), is an SR that uses statistical methods to combine the results of two or more studies.1

An SR should not be confused with an MA. The former is always possible, whereas the latter is only sometimes possible. When conditions allow, however, MAs provide very useful, manageable information on the effect of a treatment or intervention, both in general and in specific patient groups. In addition, MAs make it possible to estimate the effect of an intervention more precisely and to detect moderate but clinically important effects that may have gone undetected in the primary studies. Typically, MAs combine aggregate data from published studies, but sometimes individual data from patients in different studies can be combined. This is called individual patient data meta-analysis and is considered the gold standard in SRs.6

It should be noted that SRs use a systematic method to search for all potentially relevant studies and apply explicit, reproducible, predefined criteria to select the articles included in the final review. It is these features that give SRs their scientific character, in contrast to narrative reviews. Table 1 shows the differences between the two types of review.

Table 1. Differences Between Systematic and Narrative Reviews.

| Characteristic | Narrative review | Systematic review |
| --- | --- | --- |
| Question of interest | Not structured, not specific | Structured question, well-defined clinical problem |
| Article search and sources | Not detailed and not systematic | Structured and explicit search |
| Selection of articles of interest | Not detailed and not reproducible | Selection based on explicit criteria uniformly applied to all articles |
| Assessing the quality of the information | Absent | Structured and explicit |
| Synthesis | Often a qualitative summary | Qualitative and quantitative summary |
| Inferences | Sometimes evidence-based | Normally evidence-based |

As with clinical trials, a protocol should be developed before an SR is carried out.7 This helps the researchers give due consideration to the most appropriate methods for the review and prevents decisions from being made a posteriori on the basis of the results. The first international register of protocols for systematic reviews other than Cochrane SRs was recently launched under the name PROSPERO (http://www.crd.york.ac.uk/prospero/).

STAGES IN A SYSTEMATIC REVIEW

Briefly, an SR consists of the following steps:

• Definition of the clinical question of interest and the inclusion and exclusion criteria for studies.

• Identification and selection of relevant studies.

• Extraction of data from primary studies.

• Analysis and presentation of results.

• Interpretation of results.

The first step is to correctly formulate the clinical question of interest. In general, this should be explicit and structured so as to include the following key components:8

• The specific population and context. For example, elderly patients (over 75 years) admitted for acute myocardial infarction with ST elevation.

• The exposure of interest. This could be a risk factor, a prognostic factor, an intervention or treatment, or a diagnostic test. In the case of an intervention, treatment or diagnostic test a control exposure is usually defined at the same time. For example, primary angioplasty (intervention) versus fibrinolysis (control).

• Events of interest. For example, total mortality, cardiovascular mortality, readmission for acute coronary syndrome, revascularizations, etc.

From these elements, you might frame the question as follows: compared with fibrinolysis, does primary angioplasty reduce mortality and myocardial infarction in patients over 75 years of age? Once the question of interest has been defined and circumscribed, it is easier to establish the inclusion and exclusion criteria for primary studies. An ill-defined research question, on the other hand, leads to confused decision-making about which studies may be relevant in answering the question.

In many cases, it is not easy to decide what the specific research question should be, although it should clearly be clinically relevant. If questions are too vague (e.g. is primary angioplasty useful in acute myocardial infarction?), they will be of little help to the clinician when making a decision about a particular patient. Exposures or patient characteristics that may affect the event of interest should also be taken into account. For example, it is not uncommon for patients over 75 years of age to be receiving oral anticoagulation, which could affect the occurrence of the event of interest. The study population could therefore be restricted to patients who are not receiving oral anticoagulation. However, overly specific inclusion criteria may limit the applicability of the results. Another option is to define a broad question that makes clinical sense and then explore more specific questions. For example, we could include all patients with acute myocardial infarction and then use exploratory analyses to determine the effect of the intervention in those treated with oral anticoagulants and in those who are not. However, this strategy can lead to problems similar to those found in subgroup analysis.9 Finally, using inclusion criteria that are too broad runs the risk that the analysis will make no real clinical or biological sense.10

At this stage, it is important to decide which study designs to include in the review. The decision depends on the type of question we are trying to answer. If the aim is to evaluate the efficacy of an intervention, as in the previous example, we clearly need to include randomized clinical trials (RCTs), if any are available. The same is true for the assessment of the reliability and safety of a diagnostic test. For SRs of community or public health interventions, or when interventions are assessed over the long term (particularly with regard to their safety), observational studies are more relevant. Occasionally, no RCTs are available on a given intervention, and in that case, too, we will need to analyze observational studies.

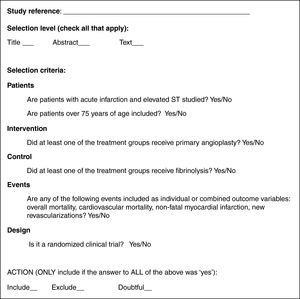

Figure 1 shows, in simplified form, the elements of the question of interest in our example and the inclusion and exclusion criteria for the SR deriving from it.

Figure 1. Example of the elements making up the question of interest for a systematic review, and inclusion and exclusion criteria for the primary studies.

Identifying and Selecting Studies

This stage consists of several parts:

1. Identifying potential articles

• Deciding on restrictions regarding the publication language.

• Deciding on the sources for obtaining primary studies.

• Obtaining the titles and abstracts of potential primary studies.

2. Selecting potential articles

• Applying the inclusion and exclusion criteria to the titles and abstracts obtained.

• Obtaining potential articles based on eligible titles and abstracts and applying the inclusion and exclusion criteria.

• Evaluating the level of agreement in study selection.

As with any research study, errors in data collection can invalidate the results of an SR. To minimize random error and bias, it is extremely important to obtain as many primary studies as possible on the question of interest. Omitting studies can introduce bias if the final sample of selected articles is not representative. Two decisions need to be made at this point: first, whether to restrict the language of publication and, second, whether to include studies that have not been published in medical journals.

With regard to language, for practical purposes only publications in English and the native language of the author of the SR are usually included. Nevertheless, studies have shown that research quality is not necessarily related to the language of publication.11 Restricting the language of publication may also bias the results of the SR by excluding potentially relevant studies.12

For reasons of practicality, it may seem reasonable to include only studies published in medical journals. One could argue that, having been through a peer review process, these studies are the most reliable.13 However, regardless of quality, studies with negative or inconclusive results are less likely to be published,14, 15 and their exclusion may bias the results of the SR. This is known as publication bias, and it makes it more likely that an SR that excludes unpublished studies will overestimate the relationship between the exposure and the event of interest. In extreme cases, this bias can lead to completely futile treatments being presented as effective.16, 17

Once a decision has been taken on the preceding two questions, the following step is crucial: where to search for primary studies? Various strategies are available:

• Electronic databases: MEDLINE, EMBASE, CENTRAL.

• Non-indexed databases: AMED, CINAHL, BIOSIS, etc.

• Hand-searching of journal tables of contents, proceedings and abstracts of scientific meetings, and books.

• Lists of references and citations: Science Citation Index and similar.

• Records of ongoing studies (e.g., clinicaltrials.gov).

• Contact with pharmaceutical companies.

• Contact with fellow experts in the field of interest.

For obvious reasons, the most widely used strategy today is to search electronic databases. This is not, however, a simple strategy. Although there is some overlap between databases, many journals indexed in one database are not indexed in others. MEDLINE, for example, indexes only about 5600 of the more than 16 000 existing biomedical journals, most of them in English. EMBASE indexes over 1000 journals that are not included in MEDLINE, many of them European. Other databases complement MEDLINE and EMBASE further, and efforts have been made to register unpublished literature (gray literature).18

For practical reasons, potentially eligible articles are usually identified from their titles and abstracts. However, each database has its own structure and uses its own criteria for indexing and keywords. In MEDLINE, for example, the controlled vocabulary used for indexing is called MeSH (Medical Subject Headings). The assistance of a documentalist (information specialist) experienced in SRs is crucial at this stage. The final search strategy should also be described in the published SR so that its reproducibility can be verified.
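Search strategies are usually built by combining the synonyms for each element of the question with OR and then combining the elements with AND. The following Python sketch assembles a PubMed-style query string in that way for the example question. The concept groups and terms, including the `[Mesh]` and `[tiab]` field tags, are illustrative assumptions and not a validated strategy; a real strategy should be designed with the documentalist and adapted to each database's own syntax.

```python
# Illustrative concept groups for the example question (patients over 75
# with STEMI, primary angioplasty vs fibrinolysis). These terms are
# assumptions for demonstration, not a validated search strategy.
CONCEPTS: dict[str, list[str]] = {
    "population": [
        '"Myocardial Infarction"[Mesh]',
        '"myocardial infarction"[tiab]',
        "STEMI[tiab]",
    ],
    "elderly": ['"Aged"[Mesh]', "elderly[tiab]"],
    "intervention": [
        '"Percutaneous Coronary Intervention"[Mesh]',
        '"primary angioplasty"[tiab]',
    ],
    "control": [
        '"Thrombolytic Therapy"[Mesh]',
        "fibrinolysis[tiab]",
        "thrombolysis[tiab]",
    ],
}


def build_query(concepts: dict[str, list[str]]) -> str:
    """OR together the synonyms of each concept, then AND the concepts."""
    blocks = ["(" + " OR ".join(terms) + ")" for terms in concepts.values()]
    return " AND ".join(blocks)


if __name__ == "__main__":
    print(build_query(CONCEPTS))
```

Keeping each concept as a separate list makes it easy to report the final strategy in the publication and to add synonyms without disturbing the overall structure.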

Depending on the topic of interest, restricting the search to electronic databases may not be optimal. It is sometimes advisable to add a complementary strategy to identify unpublished studies. This phase is one of the most laborious and often requires hand-searching of journal abstracts or conference proceedings, contact with experts in the field or with pharmaceutical companies, etc. Clearly, this involves a significant investment of time and financial resources. Recognizing this, the Cochrane Collaboration has led an international initiative to develop a register of controlled trials, formerly known as the Cochrane Controlled Trials Register and now called CENTRAL.19 This constantly updated resource contains hundreds of thousands of records of studies published in indexed journals as well as in supplements (usually conference proceedings) and is of undoubted value in identifying RCTs.20

Applying search strategies in several electronic databases usually generates a large number of references, many of which are likely to be duplicated across databases. It is therefore useful at this stage to use software for the automated management of bibliographic citations, such as ProCite or Reference Manager.
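Reference managers typically flag duplicates by matching fields such as the DOI or the title. The Python sketch below shows one simple matching rule of this kind; the record format (dicts with `title`, `year` and an optional `doi` key) and the rule itself are hypothetical, and candidate duplicates should still be checked by hand before they are discarded.

```python
import re
import unicodedata


def normalize_title(title: str) -> str:
    """Lower-case, strip accents and punctuation, and collapse whitespace."""
    text = unicodedata.normalize("NFKD", title)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    text = re.sub(r"[^a-z0-9 ]", " ", text.lower())
    return " ".join(text.split())


def deduplicate(records: list[dict]) -> list[dict]:
    """Keep the first record seen for each DOI or (normalized title, year) key."""
    seen: set[tuple] = set()
    unique = []
    for rec in records:
        keys = [("title", normalize_title(rec["title"]), rec.get("year"))]
        doi = (rec.get("doi") or "").strip().lower()
        if doi:
            keys.append(("doi", doi))
        if any(key in seen for key in keys):
            continue  # hypothetical rule: any matching key marks a duplicate
        seen.update(keys)
        unique.append(rec)
    return unique
```

Matching on either key catches records that lack a DOI in one database but share a title with a record from another.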

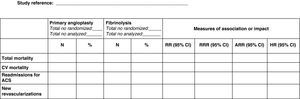

Selection of Potential Articles

Once we have a first list of titles and abstracts, we need to make an initial selection or screening of potentially eligible articles. A study selection form based on explicit, understandable criteria is useful at this stage.21 A simple example for the research question referred to above is shown in Figure 2. The selection process usually begins with a review of titles and abstracts; if there are doubts about the suitability of an article, the full text should be reviewed.

Figure 2. Hypothetical example of a form for selecting potential articles for a systematic review.
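The logic of a selection form like the one in Figure 2 can be written as a small decision rule: a single “no” excludes the article, while any “unclear” answer means the full text must be read before deciding. The Python sketch below encodes that rule; the item names are hypothetical stand-ins for the criteria of the example question, not the actual contents of Figure 2.

```python
from enum import Enum


class Answer(Enum):
    YES = "yes"
    NO = "no"
    UNCLEAR = "unclear"


# Hypothetical screening items for the example question.
ITEMS = (
    "randomized_trial",
    "patients_over_75_with_stemi",
    "primary_angioplasty_vs_fibrinolysis",
    "reports_mortality",
)


def screen(answers: dict[str, Answer]) -> str:
    """Classify one title/abstract as 'exclude', 'unclear' or 'eligible'.

    Items the reviewer left blank are treated as unclear. Both 'unclear'
    and 'eligible' articles go on to full-text review.
    """
    values = [answers.get(item, Answer.UNCLEAR) for item in ITEMS]
    if Answer.NO in values:
        return "exclude"
    if Answer.UNCLEAR in values:
        return "unclear"
    return "eligible"
```

Treating blank items as unclear is a conservative choice: it sends an article to full-text review rather than excluding it on missing information.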

In order to increase the reliability and thoroughness of the process, study selection should be carried out by two independent reviewers. It is equally important to measure the degree of agreement between reviewers by calculating the kappa statistic for each of the items on the selection form. Simply put, this statistic measures the degree of agreement between reviewers above that expected by chance.22, 23 Where reviewers disagree about whether to include an article or not, a third, senior researcher is usually appointed to act as arbitrator and to make the final decision.
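Cohen's kappa compares the observed proportion of agreement p_o with the agreement expected by chance p_e, computed from each reviewer's marginal proportions: κ = (p_o − p_e) / (1 − p_e). A minimal Python sketch for one item of the selection form, assuming each reviewer's decisions are stored as a list of labels in the same article order:

```python
import math
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's kappa for two reviewers rating the same items in the same order."""
    if not rater_a or len(rater_a) != len(rater_b):
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed proportion of agreement.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each reviewer's marginal proportions.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(counts_a[label] * counts_b[label] for label in counts_a) / n**2
    if p_e == 1:
        # Both reviewers used a single identical label: kappa is undefined.
        return math.nan
    return (p_o - p_e) / (1 - p_e)
```

For instance, if two reviewers agree on 8 of 10 abstracts and each classifies 4 as “include” and 6 as “exclude”, then p_e = 0.52 and κ ≈ 0.58, noticeably lower than the raw 80% agreement.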

Finally, it is important to note that the entire process of identifying and selecting studies should be accurately reported using a flow diagram of articles identified in each phase, articles eliminated, and the reasons for their elimination (Figure 3).

Figure 3. Example of the article selection process.
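The counts in a flow diagram such as Figure 3 must be internally consistent: each stage equals the previous one minus the records removed at that stage. A short Python sketch of that bookkeeping follows; the stage names and inputs are hypothetical.

```python
from collections import Counter


def flow_counts(
    identified: int,
    duplicates: int,
    excluded_on_abstract: int,
    full_text_exclusion_reasons: list[str],
) -> dict[str, int]:
    """Derive each stage of a study-selection flow diagram from the removals.

    `full_text_exclusion_reasons` holds one reason per article excluded
    after full-text review.
    """
    screened = identified - duplicates
    full_text = screened - excluded_on_abstract
    included = full_text - len(full_text_exclusion_reasons)
    if min(screened, full_text, included) < 0:
        raise ValueError("stage counts are inconsistent")
    counts = {
        "identified": identified,
        "screened (titles/abstracts)": screened,
        "assessed in full text": full_text,
        "included": included,
    }
    # Tally the reasons for exclusion so they can be reported in the diagram.
    for reason, n in Counter(full_text_exclusion_reasons).items():
        counts[f"excluded in full text: {reason}"] = n
    return counts
```

Recording one reason per excluded full-text article makes the reasons for elimination reportable directly from the same data.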

Extracting Data From Primary Studies

In this phase, information should be extracted from each study as reliably as possible, so extraction should be done in duplicate whenever feasible. If that is not feasible, an alternative is to have another reviewer carry out an independent audit of a random sample of studies.
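Both options can be supported with very little tooling. The Python sketch below, with hypothetical function and field names, lists the fields on which two independent extraction forms disagree (for resolution by discussion or by a third reviewer) and draws a reproducible random sample of studies for an audit when extraction is done only once.

```python
import random


def extraction_discrepancies(form_a: dict, form_b: dict) -> dict[str, tuple]:
    """Fields on which two independent extractions disagree.

    A field filled in by only one reviewer appears with None for the other.
    """
    fields = sorted(set(form_a) | set(form_b))
    return {
        field: (form_a.get(field), form_b.get(field))
        for field in fields
        if form_a.get(field) != form_b.get(field)
    }


def audit_sample(study_ids: list[str], fraction: float, seed: int) -> list[str]:
    """Random sample of studies for an independent audit of single extraction.

    A fixed seed makes the sample reproducible, so it can be reported.
    """
    k = min(len(study_ids), max(1, round(fraction * len(study_ids))))
    return random.Random(seed).sample(study_ids, k)
```

The audit fraction is an assumption of this sketch; the proportion of studies to audit should be set in the review protocol.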

The information to be extracted from the primary studies should be agreed on during the design phase of the review. In general, it should be the data needed to accept or reject the study hypothesis. In summary, the data extraction form usually includes:24

A. Information on patients, the intervention of interest, the control intervention, and study design.

B. Information on the results.

C. Information on the methodological quality of the study.

Point A covers all the information that may influence the outcome and may vary between studies. In our example, an RCT that included patients with a mean age of 65 years and a median door-to-balloon time of 63 min would not be comparable to another trial in which the mean patient age was 75 years and the door-to-balloon time was 96 min. Differences of this kind could explain differences in the magnitude of the intervention effect between studies, i.e. they could help to explain the heterogeneity of the effect. The challenge is to strike a balance between collecting complete information and avoiding unnecessary information that would overload the review.

The information in B corresponds to the results. The format chosen will depend on how the events of interest are defined: as a dichotomous or as a continuous variable. In the first case, it is sometimes easy to obtain the number and percentage of patients in each arm of the study in whom the event of interest occurred. On other occasions, the results may be expressed as a measure of association or impact, such as relative risk, relative risk reduction, absolute risk reduction, odds ratio or hazard ratio. Figure 4 shows a hypothetical form for the extraction of results. Fortunately, the number and approximate percentage of patients with the event of interest can usually be derived from the usual measures of association or impact. When the outcome variable is continuous, such as the ejection fraction, the information of interest is the mean and standard deviation in each treatment group.

Figure 4. Hypothetical example of a form for extracting results from studies included in a systematic review. ACS, acute coronary syndrome; ARR, absolute risk reduction; CI, confidence interval; CV, cardiovascular; HR, hazard ratio; RR, relative risk; RRR, relative risk reduction.
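The relationships between event counts and the usual measures of association can be made explicit with a small Python sketch. It assumes a trial reports events and patients in each arm; absolute risk reduction is defined here as control-arm risk minus intervention-arm risk, so it is positive when the intervention reduces events. The helper `events_from_rr` is a hypothetical illustration of recovering approximate counts from a reported relative risk when the control-arm data are available; hazard ratios cannot be converted this simply.

```python
from dataclasses import dataclass


@dataclass
class TwoByTwo:
    """Event counts in the intervention and control arms of one trial."""
    events_treated: int
    n_treated: int
    events_control: int
    n_control: int

    def risks(self) -> tuple[float, float]:
        return self.events_treated / self.n_treated, self.events_control / self.n_control

    def relative_risk(self) -> float:
        risk_t, risk_c = self.risks()
        return risk_t / risk_c

    def relative_risk_reduction(self) -> float:
        return 1 - self.relative_risk()

    def absolute_risk_reduction(self) -> float:
        # Positive when the intervention lowers the risk of the event.
        risk_t, risk_c = self.risks()
        return risk_c - risk_t

    def odds_ratio(self) -> float:
        a = self.events_treated
        b = self.n_treated - self.events_treated
        c = self.events_control
        d = self.n_control - self.events_control
        return (a * d) / (b * c)


def events_from_rr(rr: float, events_control: int, n_control: int, n_treated: int) -> float:
    """Approximate events in the intervention arm from a reported RR,
    assuming the control-arm events and both arm sizes are reported."""
    return rr * (events_control / n_control) * n_treated
```

With 10 of 100 events in the intervention arm and 20 of 100 in the control arm, for example, RR = 0.5, RRR = 50%, ARR = 10 percentage points and OR ≈ 0.44.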

Finally, the data extraction form should include information on the methodological quality of the included studies, as quality is closely related to the magnitude of the estimated effect. There is controversy regarding the best way to assess the methodological quality of a study.25 Some argue for the use of quality rating scales, several of which have been developed,26 mostly for RCTs; some are generic and others specific to certain clinical areas. However, it has been shown that using one scale or another can lead to substantial variation in the results of an MA,27 so none of them is entirely reliable. Recently, a new system called GRADE (Grading of Recommendations Assessment, Development, and Evaluation) has been developed to rate the quality of the evidence in SRs.28 This system, developed and agreed upon by a group of international leaders in the implementation of clinical practice guidelines, offers several advantages over the others, chiefly:

• The quality of the evidence, which is classified as high, moderate, low and very low, is reported separately from the grade of recommendation (strong or weak recommendation).

• The values and preferences of patients are recognized and incorporated.

• It provides a clear and pragmatic level of recommendation (strong or weak) for clinicians, patients and managers.

• It explicitly assesses the importance to patients of the outcome variables for the therapeutic alternatives considered.

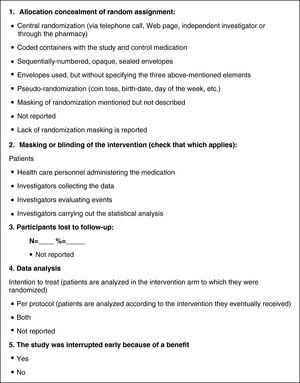

Some authors advocate collecting and evaluating the individual methodological elements of each study instead of using the controversial scales.29 The information collected will depend on the study design. In the case of RCTs, which are the most common design in reviews of interventions, the aspects of design and implementation most frequently related to the risk of bias are: allocation concealment, blinding (masking) of the interventions, losses to follow-up, the type of analysis, and early termination of the trial because of an apparent benefit. Figure 5 shows a hypothetical example of a data collection form for the methodological elements of RCTs.

Figure 5. Hypothetical example of a form to assess the quality of randomized clinical trials included in a systematic review.

Analysis and Presentation of Results

Summarizing and presenting the results of primary studies obtained using a systematic, reproducible methodology constitutes, in itself, a qualitative SR. The next step is to combine the results of primary studies using statistical methods, i.e., meta-analysis (MA).

Conceptually, MAs are used to combine the results of two or more similar studies of a particular intervention, provided the same outcome variables are used. An MA does not provide a simple arithmetic average of the results of the different studies, but a weighted average: each study is assigned a weight, and greater weight goes to studies that carry more information, i.e. studies that are larger and/or have more events. Because MA also takes into account within- and between-study variability when combining results, the validity of the conclusions is further enhanced. If there is a large amount of variation between the results of the included studies (heterogeneity), it may not be appropriate to combine them statistically. In that case, only the results and characteristics of the individual studies should be presented, without further statistical treatment; it can be useful to present the results graphically and the study characteristics in tables.
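One common way to obtain such a weighted average is the inverse-variance method: each study's estimate (here the log relative risk) is weighted by the reciprocal of its variance, so larger studies with more events count for more. The Python sketch below implements the fixed effects version. It assumes every study has at least one event in each arm (real software applies continuity corrections) and uses a normal approximation for the 95% confidence interval; other weighting schemes, such as Mantel-Haenszel, are also widely used. Between-study variability enters only in the random effects model.

```python
import math


def log_rr_and_variance(e_t: int, n_t: int, e_c: int, n_c: int) -> tuple[float, float]:
    """Log relative risk and its large-sample variance for one study.

    Assumes at least one event in each arm (no continuity correction).
    """
    log_rr = math.log((e_t / n_t) / (e_c / n_c))
    variance = 1 / e_t - 1 / n_t + 1 / e_c - 1 / n_c
    return log_rr, variance


def fixed_effects_pool(estimates: list[float], variances: list[float], z: float = 1.96):
    """Inverse-variance weighted average with a normal-approximation CI."""
    weights = [1 / v for v in variances]
    pooled = sum(w * y for w, y in zip(weights, estimates)) / sum(weights)
    se = math.sqrt(1 / sum(weights))
    return pooled, pooled - z * se, pooled + z * se


if __name__ == "__main__":
    # Hypothetical trials: (events PCI, n PCI, events fibrinolysis, n fibrinolysis).
    trials = [(10, 100, 20, 100), (30, 250, 40, 250)]
    logs, variances = zip(*(log_rr_and_variance(*t) for t in trials))
    est, lo, hi = fixed_effects_pool(list(logs), list(variances))
    print(f"pooled RR {math.exp(est):.2f} (95% CI {math.exp(lo):.2f}-{math.exp(hi):.2f})")
```

Pooling is done on the log scale because ratio measures are approximately normally distributed there; the result is exponentiated back to a relative risk for presentation.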

Basically, two types of models can be used to combine results statistically: fixed effects models and random effects models. The first assumes that the true treatment effect is the same in all studies, while the second assumes that the true effect varies randomly from study to study. In other words, the fixed effects model assumes that there is only one source of variability in the results (sampling error within each study), while the random effects model adds a second source: variation in the true effect between studies. In practice, this means that the random effects model tends to produce more conservative estimates of the combined effect (wider confidence intervals). The choice of model depends on our view of the similarities and differences between the studies to be combined, although it is common to use both approaches.
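A widely used random effects method is that of DerSimonian and Laird, which estimates the between-study variance τ² from Cochran's Q statistic and adds it to each study's variance before weighting. The Python sketch below is a minimal version operating on study estimates (for example, log relative risks) and their variances; it truncates τ² at zero and uses a normal approximation for the confidence interval.

```python
import math


def dersimonian_laird(estimates: list[float], variances: list[float], z: float = 1.96):
    """Random effects pooled estimate, 95% CI and tau^2 (DerSimonian-Laird)."""
    k = len(estimates)
    w = [1 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, estimates)) / sum_w
    # Cochran's Q: weighted squared deviations from the fixed effects estimate.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, estimates))
    c = sum_w - sum(wi**2 for wi in w) / sum_w
    # Between-study variance, truncated at zero.
    tau2 = max(0.0, (q - (k - 1)) / c) if c > 0 else 0.0
    # Each study's weight now includes tau^2, which evens out the weights.
    w_star = [1 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_star, estimates)) / sum(w_star)
    se = math.sqrt(1 / sum(w_star))
    return pooled, pooled - z * se, pooled + z * se, tau2
```

For two studies with estimates 0 and 1 and variances of 0.1 each, τ² = 0.4 and the half-width of the 95% confidence interval grows from about 0.44 under the fixed effects model to about 0.98, which is the “more conservative” behavior described above.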

But what do we mean when we say that there is “heterogeneity” between studies, and how is it measured? Basically, it means that, after weighting the studies, the results of individual studies (the effect of the intervention) differ more than would be expected by chance. In other words, the effect of the intervention differs between studies because of differences in study design, in the methods used to collect information, in the type of analysis, and/or in the characteristics of the study population. For example, suppose that one clinical trial of an antihypertensive drug included 70% African Americans and another study of the same drug included only 10%. Now imagine that the drug has a very powerful antihypertensive effect in African American populations and no effect in the rest of the population. Clearly, the first study will show a positive effect of the intervention, while the second will show little or no effect. It would not be appropriate to combine the results of the two studies, because the combined “mean” effect would hide a much richer and more complex reality and would lead to confusion.

Several statistics are available to quantify heterogeneity, the most common being Q, H and I². The easiest to interpret is I², which indicates the percentage of the total variability in the intervention effect across studies that is due to heterogeneity between studies rather than to chance. It is generally held that values of 25%, 50% and 75% indicate low, moderate and high heterogeneity, respectively.30
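The three statistics are closely related. Q is the weighted sum of squared deviations of each study estimate from the fixed effects pooled estimate; with k studies, H = √(Q/(k − 1)) and I² = (Q − (k − 1))/Q, expressed as a percentage and set to zero when negative. A minimal Python sketch, assuming study estimates and their variances are already available:

```python
import math


def heterogeneity(estimates: list[float], variances: list[float]) -> tuple[float, float, float]:
    """Cochran's Q, H and I^2 (as a percentage) for k >= 2 studies."""
    k = len(estimates)
    if k < 2:
        raise ValueError("heterogeneity needs at least two studies")
    w = [1 / v for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w, estimates)) / sum(w)
    q = sum(wi * (yi - pooled) ** 2 for wi, yi in zip(w, estimates))
    df = k - 1
    h = math.sqrt(q / df)
    # Share of total variability not attributable to chance, floored at 0%.
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return q, h, i2
```

Because Q grows with the number of studies, I² is easier to compare across meta-analyses of different sizes, which is one reason it is the most commonly reported of the three.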

The results of MAs are presented graphically using forest plots. This type of graph shows the point estimate and confidence interval of each individual study, with a marker whose size reflects the study's statistical weight, together with the combined estimate. Suppose that, in our example, we combine eight studies to analyze the effect on cardiovascular mortality of angioplasty compared with fibrinolysis in patients over 75 years of age. Figure 6A presents the combined analysis of the eight studies as a forest plot. The overall effect is inconclusive and, moreover, there is substantial heterogeneity between studies (I²=90%).

Figure 6. Meta-analysis of the effect of primary angioplasty versus fibrinolysis on total mortality in patients with myocardial infarction. A, including all studies. B, analysis of subgroups based on door-to-balloon time; upper figure, studies in which mean door-to-balloon time exceeded 140 min; lower figure, studies in which the mean door-to-balloon time was <90 min. CI: confidence interval.

A simplistic interpretation would be that primary angioplasty is not superior to fibrinolysis in reducing cardiovascular death in patients over 75 years of age. However, the high heterogeneity between studies should make us suspect that this result is misleading. Visual inspection shows that angioplasty was superior to fibrinolysis in studies 2, 4, 5 and 6, but inferior in studies 1, 3, 7 and 8. A careful reading of these studies reveals that the mean door-to-balloon time was <90 min in all the studies in which angioplasty was superior, whereas angioplasty was inferior to fibrinolysis in all the studies with a door-to-balloon time over 140 min. In this case, it would be justifiable to perform a subgroup analysis in which studies with a door-to-balloon time <90 min and those with longer door-to-balloon times are analyzed separately. Figure 6B shows the results. Heterogeneity is markedly reduced within each subgroup and the results are consistent: primary angioplasty shows a significant benefit in studies with shorter door-to-balloon times (bottom of Figure 6B) but is inferior to fibrinolysis in studies with longer door-to-balloon times (top of Figure 6B).
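A subgroup analysis such as the one by door-to-balloon time can be sketched as pooling each subgroup separately and then asking whether the subgroup estimates differ more than chance would allow, using Q_between (a chi-square statistic with G − 1 degrees of freedom for G subgroups). The Python sketch below uses fixed effects pooling within subgroups for brevity (random effects pooling is a common alternative); the function names are hypothetical, and the p-value helper is valid only for two subgroups.

```python
import math


def pool_fixed(estimates: list[float], variances: list[float]) -> tuple[float, float]:
    """Inverse-variance pooled estimate and its variance."""
    weights = [1 / v for v in variances]
    total = sum(weights)
    return sum(w * y for w, y in zip(weights, estimates)) / total, 1 / total


def subgroup_analysis(groups: dict[str, tuple[list[float], list[float]]]):
    """Pool each subgroup separately and compute Q_between across subgroups.

    `groups` maps a subgroup name to (estimates, variances) of its studies.
    Returns the per-subgroup results, Q_between and its degrees of freedom.
    """
    per_group = {name: pool_fixed(est, var) for name, (est, var) in groups.items()}
    overall, _ = pool_fixed(
        [est for est, _ in per_group.values()],
        [var for _, var in per_group.values()],
    )
    q_between = sum((est - overall) ** 2 / var for est, var in per_group.values())
    return per_group, q_between, len(groups) - 1


def chi2_sf_df1(x: float) -> float:
    """Upper-tail p-value of a chi-square with 1 df (two subgroups only)."""
    return math.erfc(math.sqrt(x / 2))
```

As noted earlier for subgroup analyses in general, a subgroup split suggested by inspecting the results should be treated as exploratory rather than confirmatory.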

Finally, it is important to point out that guidelines for reporting SRs are available and provide checklists of items to be covered in the publication. The MOOSE guidelines detail the specific items that SRs of observational studies should include,4 while the PRISMA guidelines (which replaced the QUOROM recommendations) cover SRs and MAs of RCTs.3 They can be consulted online at http://www.consort-statement.org.

Interpreting the Results

The SR ends with the interpretation of results. This includes a discussion of the limitations of the review, covering both the potential biases of the original studies and the potential biases that could affect the SR itself. It is also important to discuss the consistency and applicability of the findings, and to propose recommendations for future research on the topic of interest.

SYSTEMATIC REVIEWS AND CLINICAL PRACTICE

However consistent and convincing the findings of an SR may be, in the end it is the clinician who must make decisions regarding a particular patient. The findings of an SR should not be taken as fixed, unchanging standards resulting from an “evidentialist orthodoxy”. In other words, we must adapt the findings of an SR to the patient, and not vice versa. In this regard, before making a decision about a patient based on an SR, we recommend considering the following questions:31

Are the Findings Applicable to My Patient?

The SR has shown that primary angioplasty is superior to fibrinolysis in elderly patients. But imagine that my patient has a condition that was an exclusion criterion in all the clinical trials included in the SR, such as a creatinine clearance <30 mL/min. In this case, my patient would not be represented by the clinical trials included in the SR.

Is the Intervention Feasible in My Patient?

There may be regional differences in the availability and/or experience of applying a particular technique, differences which should be taken into account when applying the intervention to an individual patient.

What is the Risk-Benefit for My Patient?

Even if the intervention is feasible and applicable, the specific risks for a particular patient should be taken into account, and this is an aspect which is generally poorly represented by clinical trials.

What Are My Patient's Particular Values and Preferences?

Accustomed to making decisions based on markers of myocardial necrosis, electrocardiograms, and other complementary tests, we can succumb to an overly paternalistic form of medicine, in which we treat the patient with the best intentions but without taking his or her point of view into account.

CONCLUSIONS

SRs are an essential tool for synthesizing available scientific information; they enhance the validity of the findings of individual studies and identify areas of uncertainty where research is needed. They are essential to the practice of evidence-based medicine. However, SRs should be conducted following a strict methodology, with quality control, to avoid biased conclusions. Ultimately, it is the clinician who must make decisions regarding a particular patient; SRs are just one more tool to be used, judiciously, in making those decisions.

CONFLICTS OF INTEREST

None declared.

Corresponding author: Unidad de Epidemiología, Servicio de Cardiología, Hospital Vall d’Hebron, Pg. Vall d’Hebron 119-129, 08035 Barcelona, Spain. nacho@ferreiragonzalez.com